---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus
rules:
- apiGroups: [""]
  resources:
  - nodes
  - nodes/proxy
  - services
  - endpoints
  - pods
  verbs: ["get", "list", "watch"]
- apiGroups:
  # Ingress moved from extensions to networking.k8s.io; the extensions
  # version was removed in Kubernetes 1.22.
  - extensions
  - networking.k8s.io
  resources:
  - ingresses
  verbs: ["get", "list", "watch"]
- nonResourceURLs: ["/metrics"]
  verbs: ["get"]

---

apiVersion: v1
kind: ServiceAccount
metadata:
  name: default
  namespace: monitoring

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
- kind: ServiceAccount
  name: default
  namespace: monitoring
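Before moving on, it may be worth confirming that the binding took effect. A minimal sketch, assuming `kubectl` points at the target cluster and your own user is allowed to impersonate ServiceAccounts: `kubectl auth can-i ... --as=` asks the API server to evaluate RBAC on behalf of the `default` ServiceAccount in `monitoring`. The function names `sa_user` and `check_prometheus_rbac` are hypothetical helpers, not part of any tool.

```shell
# Hypothetical helper: Kubernetes authenticates a ServiceAccount as the user
# system:serviceaccount:<namespace>:<name>.
sa_user() {
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# Hypothetical helper: print yes/no for each verb/resource pair that the
# "prometheus" ClusterRole should grant. Assumes kubectl is configured and
# that the current user may impersonate ServiceAccounts.
check_prometheus_rbac() {
  user="$(sa_user monitoring default)"
  for resource in nodes services endpoints pods; do
    for verb in get list watch; do
      printf '%s %s: ' "$verb" "$resource"
      # can-i prints "yes" or "no"; "no" also yields a non-zero exit status.
      kubectl auth can-i "$verb" "$resource" --as="$user"
    done
  done
  # Non-resource URL from the last rule of the ClusterRole.
  printf 'get /metrics: '
  kubectl auth can-i get /metrics --as="$user"
}
```

After applying the manifests, running `check_prometheus_rbac` should print `yes` on every line.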

Next, deploy node exporter as a DaemonSet behind a headless Service:

---

apiVersion: v1
kind: Service
metadata:
  name: node-exporter-svc
  namespace: monitoring
spec:
  ports:
  - name: tcp
    port: 9100
    protocol: TCP
  clusterIP: None
  selector:
    app.kubernetes.io/component: exporter
    app.kubernetes.io/name: node-exporter
  sessionAffinity: None
  type: ClusterIP

---

apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    app.kubernetes.io/component: exporter
    app.kubernetes.io/name: node-exporter
  name: node-exporter
  namespace: monitoring
spec:
  selector:
    matchLabels:
      app.kubernetes.io/component: exporter
      app.kubernetes.io/name: node-exporter
  template:
    metadata:
      labels:
        app.kubernetes.io/component: exporter
        app.kubernetes.io/name: node-exporter
    spec:
      containers:
      - args:
        - --path.sysfs=/host/sys
        - --path.rootfs=/host/root
        - --no-collector.wifi
        - --no-collector.hwmon
        - --collector.filesystem.ignored-mount-points=^/(dev|proc|sys|var/lib/docker/.+|var/lib/kubelet/pods/.+)($|/)
        - --collector.netclass.ignored-devices=^(veth.*)$
        name: node-exporter
        image: prom/node-exporter
        ports:
        - containerPort: 9100
          protocol: TCP
        resources:
          limits:
            cpu: 250m
            memory: 180Mi
          requests:
            cpu: 102m
            memory: 180Mi
        volumeMounts:
        - mountPath: /host/sys
          mountPropagation: HostToContainer
          name: sys
          readOnly: true
        - mountPath: /host/root
          mountPropagation: HostToContainer
          name: root
          readOnly: true
      volumes:
      - hostPath:
          path: /sys
        name: sys
      - hostPath:
          path: /
        name: root
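To roll out the exporter and confirm that a pod runs on every schedulable node, the DaemonSet's `status.desiredNumberScheduled` and `status.numberReady` fields can be compared. A sketch, assuming the manifests above are saved as `node-exporter.yaml` (a hypothetical file name); `ds_ready` and `apply_node_exporter` are hypothetical helpers.

```shell
# Hypothetical helper: succeed only if at least one pod is desired and every
# desired pod is ready. Arguments: <desired> <ready>; empty values count as 0.
ds_ready() {
  [ "${1:-0}" -gt 0 ] && [ "${1:-0}" -eq "${2:-0}" ]
}

# Hypothetical helper: apply the manifests, wait for the rollout, then compare
# desired vs. ready pods. The default file name is an assumption.
apply_node_exporter() {
  kubectl apply -f "${1:-node-exporter.yaml}" || return 1
  kubectl -n monitoring rollout status daemonset/node-exporter --timeout=120s || return 1
  counts="$(kubectl -n monitoring get daemonset node-exporter \
    -o jsonpath='{.status.desiredNumberScheduled} {.status.numberReady}')" || return 1
  set -- $counts  # intentionally unquoted: split "desired ready" into $1 and $2
  if ds_ready "$1" "$2"; then
    echo "node-exporter ready on $2/$1 nodes"
  else
    echo "node-exporter not ready: $2/$1 pods" >&2
    return 1
  fi
}
```

Because `node-exporter-svc` is headless, `kubectl -n monitoring get endpoints node-exporter-svc` should then list one address per ready node-exporter pod.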

Exec into the Prometheus pod and check that the metrics are reachable:

root@prometheus-server-865cc64c6c-5znrs:/opt/bitnami/prometheus# curl -s node-exporter-svc:9100/metrics
# TYPE node_softnet_processed_total counter
node_softnet_processed_total{cpu="0"} 1.24508272e+08
node_softnet_processed_total{cpu="1"} 1.2089665e+08
node_softnet_processed_total{cpu="2"} 9.3901353e+07
node_softnet_processed_total{cpu="3"} 9.1202167e+07
node_softnet_processed_total{cpu="4"} 8.7404825e+07
node_softnet_processed_total{cpu="5"} 1.71663948e+08
# HELP node_softnet_times_squeezed_total Number of times processing packets ran out of quota
# TYPE node_softnet_times_squeezed_total counter
node_softnet_times_squeezed_total{cpu="0"} 52
node_softnet_times_squeezed_total{cpu="1"} 58
node_softnet_times_squeezed_total{cpu="2"} 61
node_softnet_times_squeezed_total{cpu="3"} 52
node_softnet_times_squeezed_total{cpu="4"} 56
node_softnet_times_squeezed_total{cpu="5"} 68
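The output is in the Prometheus text exposition format: one `name{labels} value` sample per line, plus `# HELP` and `# TYPE` comment lines. As a quick sanity check that per-CPU counters are being read, they can be summed with `awk`. A minimal sketch; `sum_metric` is a hypothetical helper, and it assumes samples carry no trailing timestamp (the node exporter output above has none).

```shell
# Hypothetical helper: sum every sample of one metric from exposition text on stdin.
# Exits non-zero if the metric does not appear at all.
sum_metric() {
  awk -v name="$1" '
    /^#/ { next }                            # skip HELP/TYPE comment lines
    $1 == name || index($1, name "{") == 1 { # exact name, with or without labels
      total += $NF                           # the sample value is the last field
      found = 1
    }
    END {
      if (!found) exit 1
      printf "%.0f\n", total
    }
  '
}
```

Usage inside the pod: `curl -s node-exporter-svc:9100/metrics | sum_metric node_softnet_times_squeezed_total`.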

Bring the Prometheus config to the following form and restart the pod:

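global:
  scrape_interval: 15s
  evaluation_interval: 15s

alerting:
  alertmanagers:
    - static_configs:
        - targets:
          # - alertmanager:9093

rule_files:
  # - "first_rules.yml"
  # - "second_rules.yml"

scrape_configs:
  - job_name: "prometheus"
    static_configs:
      - targets: ["localhost:9090"]

  - job_name: "haproxy"
    static_configs:
      - targets: ["haproxy-exporter-svc:9101"]
        labels:
          alias: "haproxy"

  - job_name: "mysql"
    static_configs:
      - targets: ["mysql-exporter-svc:9104"]
        labels:
          alias: "mysql"

  - job_name: "node"
    # node-exporter-svc is headless (clusterIP: None), so its DNS name returns
    # one A record per node-exporter pod. A static target hits only one of those
    # pods per scrape and mixes all nodes under a single instance; DNS service
    # discovery turns every address into its own target instead.
    dns_sd_configs:
      - names: ["node-exporter-svc"]
        type: A
        port: 9100
    relabel_configs:
      # dns_sd_configs has no "labels" field, so attach the alias label here.
      - target_label: alias
        replacement: "node"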
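Before restarting, the edited file can be validated with `promtool check config`, which is part of the Prometheus distribution. A sketch, assuming the config is saved as `prometheus.yml` and that the pod belongs to a Deployment named `prometheus-server` in the `monitoring` namespace (inferred from the pod name in the prompt above, not confirmed); `list_jobs` and `check_and_restart` are hypothetical helpers.

```shell
# Hypothetical helper: print the job_name of every scrape job in a config read
# on stdin, to confirm that the "node" job is present.
list_jobs() {
  sed -n 's/^[[:space:]]*- job_name:[[:space:]]*"\{0,1\}\([^"]*\)"\{0,1\}[[:space:]]*$/\1/p'
}

# Hypothetical helper: validate the config, then restart Prometheus so the new
# file is loaded. File name, namespace and Deployment name are assumptions.
check_and_restart() {
  promtool check config "${1:-prometheus.yml}" || return 1
  kubectl -n monitoring rollout restart deployment/prometheus-server
}
```

For the config above, `list_jobs < prometheus.yml` should print `prometheus`, `haproxy`, `mysql` and `node`, one per line.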

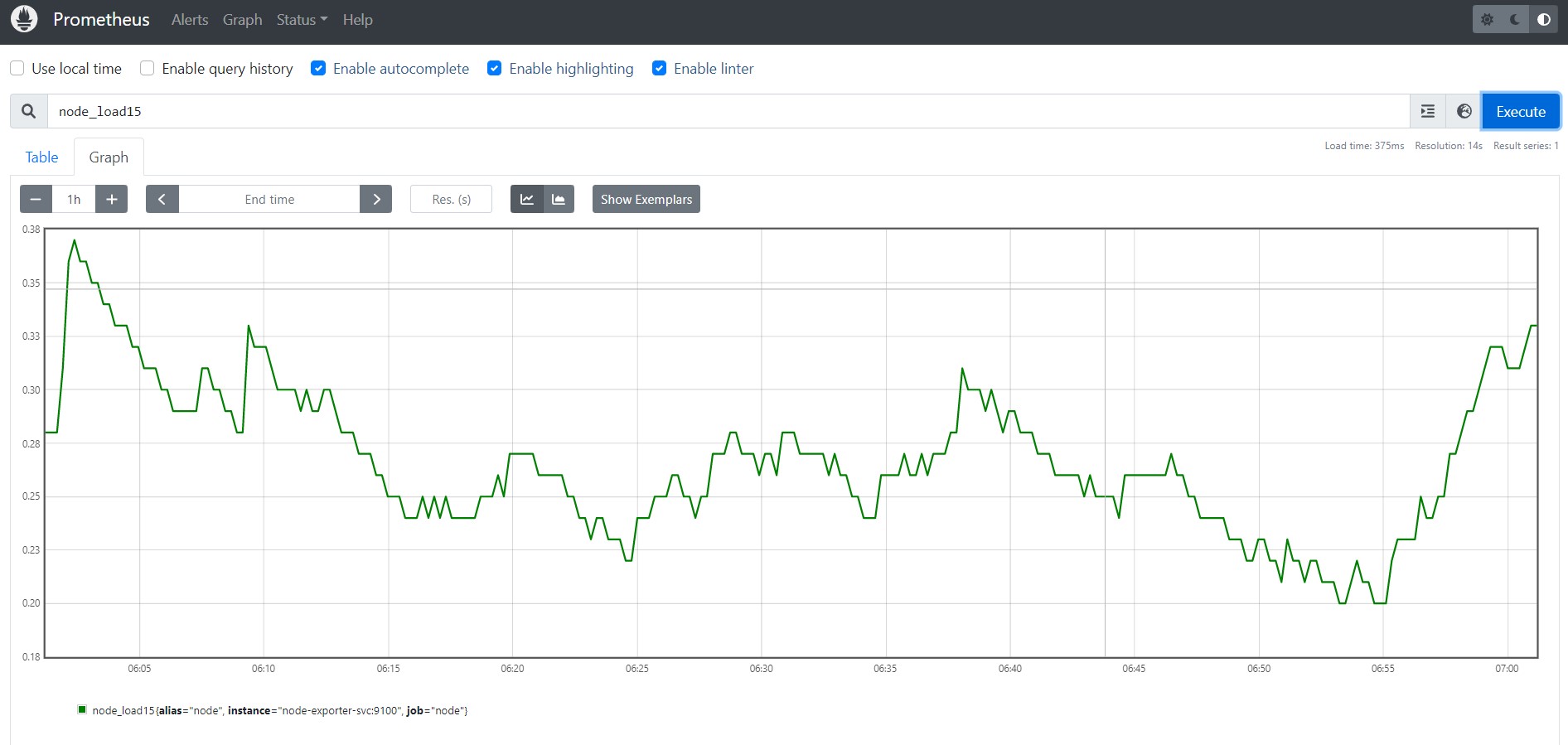

Verify that the metrics are displayed.
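Besides the web UI, the same check can be scripted against the Prometheus HTTP API: `GET /api/v1/query` evaluates an instant query and returns JSON in which each sample's `value` is a `[timestamp, "value"]` pair. A sketch, assuming it runs inside the Prometheus pod (hence `localhost:9090`, as in the `prometheus` job), that the response is compact JSON, and that `jq` is not installed, so the response is matched with `grep`; `query_up` and `all_up` are hypothetical helpers.

```shell
# Assumed address: Prometheus itself, as seen from inside its own pod.
PROM_URL="${PROM_URL:-http://localhost:9090}"

# Hypothetical helper: run the instant query up{job="<job>"} via the HTTP API.
query_up() {
  curl -fsS -G "$PROM_URL/api/v1/query" --data-urlencode "query=up{job=\"$1\"}"
}

# Hypothetical helper: read a query response on stdin and succeed only if it
# contains at least one sample and no sample has the value "0" (target down).
all_up() {
  json="$(cat)"
  printf '%s' "$json" | grep -q '"value":\[[^],]*,"1"]' &&
    ! printf '%s' "$json" | grep -q '"value":\[[^],]*,"0"]'
}
```

Usage: `query_up node | all_up && echo "all node targets are up"`.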

P.S. The next step is visualization in Grafana; a ready-made dashboard template can be found online.